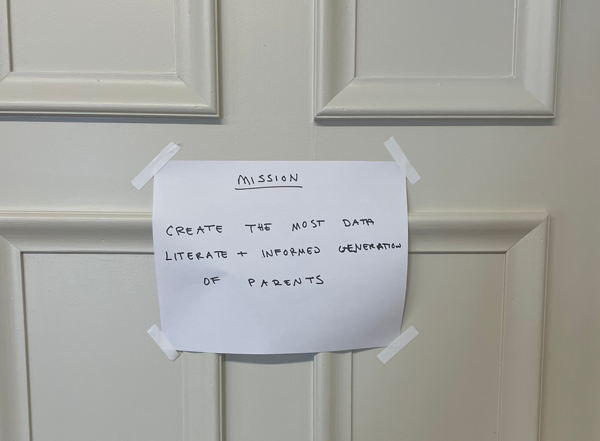

The ParentData mission statement (it’s on my door!) is “To create the most data-literate generation of parents.” The other day, someone on my team said something like this to me: “You know, we talk about this a lot. But I realized I’m not exactly sure what you mean by data literacy. What do you want people to be able to do?”

It’s a good question! Today is an attempt at an answer. I’m not the only person in the world who uses the phrase “data literacy.” But what does that mean, concretely?

It’s easiest to start with what it doesn’t mean.

One thing it doesn’t mean is that I’m aiming to train everyone to do the job I do. For one thing, I wouldn’t have a job then. But more importantly: there is value in specialization. I spent a long time in graduate school and in my professional career working on how we learn facts from data. It is my job; you all have your own jobs! Training people to be data-literate does not mean training them all to be econometricians.

A corollary of this is that data literacy isn’t about having everyone “do their own research” à la Kyrie Irving. It isn’t about training people to distrust expertise.

For me, increasing data literacy means training people to engage better with expertise — to ask the right questions and understand the context. It’s about helping people process “panic headlines” in a more nuanced and less panicky way.

In doing this, I see four key data-literacy lessons. Here they are — and, by extension, here’s what I want you to learn.

Lesson 1: Where does data come from?

We see facts based on data all the time.

- The unemployment rate is 3.6%

- 42.4% of adults have obesity

- The median household income in the U.S. in 2021 was $70,784

These facts aren’t handed down by some all-powerful data wizard in the sky. They’re based on information collected from people (in the case of the facts above, information collected in surveys). Of course, in a sense we all know that, but after talking to many people about this type of information, I’ve come to realize that we don’t think much about the implications of where data comes from.

The question of where data comes from in any given setting matters, because different approaches to data collection raise different issues and call for different interpretations. I do a lot of work with data on individual diets. Most of that data is based on people’s own reports of what they eat, and once you recognize that, you realize it is unlikely to be very accurate. We do have detailed dietary data from, say, laboratory studies that carefully control what people eat. That data is really accurate, but also not at all representative.

When we know where data comes from, we can also better understand that it comes with noise. The unemployment rate in the U.S. is based on surveys of a subset of individuals. These individuals are randomly sampled, so we expect to get the number right on average, but because the survey doesn’t cover everyone, the estimate comes with statistical error. When the unemployment rate moves from 3.6% to 3.7%, that may simply reflect sampling error, not a real change.
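To make the sampling-error point concrete, here is a small Python sketch. It is a toy, not how the official statistic is actually produced: it assumes a simple random sample, treats everyone sampled as part of the labor force, and uses a hypothetical sample size of 60,000 chosen purely for illustration. It holds the true rate fixed at 3.6% and re-runs the “survey” several times, and it uses the standard error of a proportion, sqrt(p(1 - p)/n), to gauge how much the measured rate can bounce around when nothing real has changed.

```python
import math
import random


def standard_error(rate: float, n: int) -> float:
    """Standard error of a proportion estimated from a simple random sample of size n."""
    return math.sqrt(rate * (1 - rate) / n)


def simulate_survey(true_rate: float, n: int, rng: random.Random) -> float:
    """Survey n randomly chosen people; return the share who are unemployed."""
    unemployed = sum(1 for _ in range(n) if rng.random() < true_rate)
    return unemployed / n


if __name__ == "__main__":
    TRUE_RATE = 0.036  # the real rate never changes in this simulation
    N = 60_000         # hypothetical sample size, for illustration only
    se = standard_error(TRUE_RATE, N)
    print(f"standard error: {se * 100:.2f} percentage points")
    rng = random.Random(0)
    for survey in range(1, 7):
        estimate = simulate_survey(TRUE_RATE, N, rng)
        print(f"survey {survey}: measured rate {estimate * 100:.2f}%")
```

Under these assumptions the standard error works out to roughly 0.08 percentage points, so a move of 0.1 points is well within the range that sampling noise alone can produce.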

Moral of the lesson: Data doesn’t come from a magic data fairy, and understanding where it does come from is the first part of data literacy.

Further reading on this: Where Does Data Come From?

Lesson 2: Correlation is not causality

I said at the top here that I do not intend to teach people econometrics, but this second lesson aims to teach just one important concept: the difference between correlation and causation.

If you read this newsletter often, you’ll recognize this as a lesson I repeat over and over. If you’re new, here’s the gist.

Often, when we look to learn from data, we are looking to learn the relationship between some behavior or action (the “treatment”) and something we care about (the “outcome”). For example: the relationship between eating chia seeds (the treatment) and heart health (the outcome). An easy thing to do with data is to ask whether two variables are correlated — literally, this is just asking whether they move together. Do people who eat more chia seeds have better heart health?

This kind of link is a correlation. It is not necessarily causal. Seeing that chia seeds and heart health move together doesn’t tell us that if you started eating chia seeds, your heart health would improve. The reason is that people who eat chia seeds differ from people who don’t in many other ways: they may have more education and more income, exercise more, follow weird health fads, and so on. A correlation between chia seeds and heart health can’t tell you whether the chia seeds drive the relationship or whether all those other things, which are also related to heart health, do.

A huge share of the “panic headlines” that I write and talk about are a result of people observing a correlation and interpreting it as causal. Breastfeeding and test scores. Screen time and behavior problems. Anything at all about diet and health.

If we want to learn about causal relationships, the best way to do this is with data in which the treatment is randomized, so we can be confident that the treated and untreated groups are otherwise similar. It is sometimes possible to learn about causal relationships from non-randomized data, but it usually requires something special about the setting: a source of randomness in treatment, even if it is not explicit.
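A toy simulation can show how a correlation appears with no causation behind it, and why randomization fixes the problem. In this Python sketch, which uses made-up numbers, chia seeds have no effect at all on a hypothetical “heart score.” A hidden trait (call it being health-conscious) raises the heart score and also makes people more likely to eat chia seeds. Comparing chia eaters with non-eaters when people choose for themselves shows a sizable gap; when chia eating is assigned by a coin flip, the gap disappears.

```python
import random
from statistics import mean


def chia_gap(n: int, randomize: bool, rng: random.Random) -> float:
    """Average heart score of chia eaters minus that of non-eaters.

    Toy model: chia seeds have NO effect. A hidden 'health-conscious' trait
    drives heart score and, unless randomize=True, also drives chia eating.
    """
    eaters, non_eaters = [], []
    for _ in range(n):
        health_conscious = rng.random() < 0.5
        if randomize:
            # Coin flip: chia eating is unrelated to the hidden trait.
            eats_chia = rng.random() < 0.5
        else:
            # Health-conscious people choose chia seeds far more often.
            eats_chia = rng.random() < (0.8 if health_conscious else 0.2)
        # Heart score depends on the hidden trait plus noise, never on chia.
        heart_score = 50 + (10 if health_conscious else 0) + rng.gauss(0, 5)
        (eaters if eats_chia else non_eaters).append(heart_score)
    return mean(eaters) - mean(non_eaters)


if __name__ == "__main__":
    rng = random.Random(1)
    print(f"observational gap: {chia_gap(20_000, randomize=False, rng=rng):.2f}")
    print(f"randomized gap:    {chia_gap(20_000, randomize=True, rng=rng):.2f}")
```

In this setup the observational gap should come out near 6 points (chia eaters are health-conscious 80% of the time versus 20% for non-eaters, and the trait is worth 10 points), while the randomized gap should be close to zero.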

The core data-literacy message here is to ask critically whether a relationship seems likely to be causal or whether it is likely just a correlation. Think about the treatment, the behavior or action being evaluated. Does it seem likely that the treatment is effectively random across people? If not, you are probably looking at a correlation, not a causal effect.

Further reading: Why I Look at Data Differently, Coffee Definitely Either Kills You or Makes You Live Forever, My interview with Bapu Jena

Lesson 3: Be Bayesian

When (traditional) media reports on studies, there is a breathless “A new study shows…!” tone that often accompanies the reports. It is easy to come away with the general impression that every new study should replace what we had before.

This is not true.

There are some cases where a new study is so much better than what has come before — it’s randomized where we didn’t have randomized data in the past, for example — that we do want to sit up and pay attention. But that’s not common. Most of the time, new studies are building on top of what came before (sometimes making the same mistakes) and should be considered as part of a broader literature.

A key part of data literacy, when faced with a new set of results, is asking what earlier work they build on. By extension, if we see a new result that contradicts the existing literature, it’s important to be thoughtful about how much we change our beliefs. If the new data is in some way much better than older data, it might make sense to change our beliefs substantially. But more commonly, new data isn’t necessarily better, and we should let it move our beliefs only a little (or not at all).

This idea of updating what we believe based on new data, without throwing away the old data, is what I mean by “Be Bayesian.” The phrase refers to Bayesian statistics, an approach that formalizes exactly this kind of updating.
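One simple, concrete version of this is the normal-normal model, sketched below in Python. It is one textbook instance of Bayesian updating, not the only one: it assumes both what we believed before (the prior) and the new study’s estimate can be summarized as a mean with a standard deviation, and the updated belief is then a precision-weighted average of the two, where precision is 1 / sd². The numbers are hypothetical: the existing literature is centered on no effect (0), and two new studies each report an effect of 3, one noisy and one precise.

```python
def update(prior_mean: float, prior_sd: float,
           study_mean: float, study_sd: float) -> tuple[float, float]:
    """Normal-normal Bayesian update: returns (posterior mean, posterior sd)."""
    prior_precision = 1 / prior_sd ** 2   # precision = 1 / variance
    study_precision = 1 / study_sd ** 2
    post_precision = prior_precision + study_precision
    # Posterior mean is a precision-weighted average of prior and new estimate.
    post_mean = (prior_precision * prior_mean
                 + study_precision * study_mean) / post_precision
    post_sd = post_precision ** -0.5
    return post_mean, post_sd


if __name__ == "__main__":
    # Hypothetical: the existing literature says no effect (mean 0, sd 1).
    noisy = update(0.0, 1.0, study_mean=3.0, study_sd=3.0)
    precise = update(0.0, 1.0, study_mean=3.0, study_sd=0.5)
    print(f"after noisy study:   {noisy[0]:.2f} (sd {noisy[1]:.2f})")      # 0.30 (sd 0.95)
    print(f"after precise study: {precise[0]:.2f} (sd {precise[1]:.2f})")  # 2.40 (sd 0.45)
```

The same headline result moves beliefs only slightly when the study is noisy, and substantially when it is much more precise than what came before.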

Further reading: Bayes’ Rule Is My Faves Rule, Prenatal Tests and False Positives

Lesson 4: We should democratize data

My final lesson on data literacy is more of a call to action.

Part of being data-literate is knowing what kind of data should be possible, and pushing the powers that be to actually collect it.

During the pandemic, others and I spent many hours putting together COVID-related data. In my case, we focused on school data, and much of my work collecting data on COVID outbreaks in schools was motivated by the fact that others, including government agencies, were not collecting this information. I was glad we were able to partially step in, but I remain frustrated and angry that more wasn’t done at an official level to get data that would have helped with decision-making.

In another domain: We complain in this newsletter about the need for better data on breast milk storage. The CDC has guidelines about milk storage that do not appear to be rooted in any actual evidence. I’ve said many times that we need better data on this.

The more people we have calling for these kinds of data, the more likely it is that someone will produce them. I firmly believe that if more people had pushed the Department of Education during the pandemic to collect data on schools, more would have been done. One key barrier to this advocacy is a lack of understanding of what kind of data is even possible.

This, then, is the last data-literacy lesson: Being data-literate means knowing what data is possible. It’s the ability to say, hey, CDC, you could totally set up a project to test bacterial loads in breast milk. And if enough of us shout this kind of thing, maybe it will happen.

Further reading: Breast Milk Storage, COVID-19 School Data Hub, Updates on state test score recovery

So there it is, four key building blocks of data literacy that ParentData strives for. It’s on the door, it’s down on paper — now all we need to do is get moving on informing everyone.
